The Development of a Standardised Multiprofessional Paediatric In-Situ Simulation Programme Based on a National Standards Framework: A Multicentre Approach

- Corresponding Author:

- Dr Kate O’Loughlin

Department of Paediatrics

Medway Maritime Hospital

Windmill Road, Gillingham, Kent ME7 5NY, UK

Tel: 01634 830000

E-mail: Katharine.o’loughlin@nhs.net

Abstract

Aim: In 2016, the Association for Simulated Practice in Healthcare (ASPiH) produced a standards framework to guide the delivery of high-quality simulation in healthcare. In 2017, ASPiH will use these standards as a platform to develop guidelines for individual and institutional accreditation. The Multiprofessional Paediatric in-situ Simulation (MPIS) programme is the first programme to be developed from the ASPiH standards and aims to drive multiprofessional learning initiatives across a regional network.

Methods: A regional scoping study was conducted to assess the current delivery of multiprofessional paediatric in-situ simulation. Semi-structured interviews and questionnaires were conducted with Simulation Educators (SE), using questions based on two main categories defined within the ASPiH standards framework: Faculty and Activity. The findings of the scoping study underpinned the development of driver diagrams and the creation of the MPIS programme. The MPIS programme was piloted across eight hospital sites, delivering 15 simulation sessions to 74 participants. It was evaluated using a 14-item questionnaire, administered before and after participation in the MPIS programme, that assessed participants' perceptions of how well simulation supported multiprofessional learning.

Results: Across the network, multi-professional learning was not supported, with a lack of relevant and well-defined learning objectives for professional groups other than trainee doctors; this justified the need to develop the MPIS programme. A paired t-test demonstrated a significantly improved perception that simulation supported multiprofessional learning following participation in the MPIS programme (t(73)=8.51, p<0.001). A mixed ANOVA subset analysis showed that perceived learning increased for both doctors and nurses (main effect of time: F(1,72)=71.0, p<0.001) to the same degree (time × profession interaction: F(1,72)=0.11, p=0.74).

Conclusion: Our MPIS programme supports multi-professional simulation learning initiatives and is valued by both doctors and nurses. Our programme is the first programme to be developed from the ASPiH standards framework and if endorsed nationally, the MPIS programme could lay the foundations for ASPiH’s accreditation and ensure high quality delivery of in-situ simulation.

Keywords

Multi-professional learning, Simulated inter-professional team training

What this Paper Adds?

What is already known on this subject?

• The benefits of multi-professional in-situ simulation are well documented, including the potential for inter-professional learning, improvements in team-working, and increases in confidence and competence in dealing with critical events; however, constraints to wider national adoption of in-situ simulation remain

• National practice in the delivery of simulation based education (SBE) is diverse, with a majority of simulation based educators reporting that SBE does not support inter-professional learning

• ASPiH produced a standards framework to guide healthcare practitioners in their delivery of SBE, inform the development of national SBE standards and drive future multi-professional training initiatives

What this Paper Adds?

• In this paper we describe the first in-situ simulation programme to be developed based on the ASPiH standards framework.

• Our study demonstrates that participants' perception of simulation as supporting multiprofessional learning and development improved significantly following participation in this programme.

• A standardised approach to in-situ simulation may enhance national collaborative work in simulation research, quality assurance, training and education.

Introduction

Modernisation and healthcare reform require a flexible and skilled workforce, sharing common goals and values, with collaboration at the core of healthcare delivery [1-4]. It is well recognised by policy makers, healthcare professionals and educators that bringing multi-professional groups together for learning improves collaboration and working relationships, ultimately improving the delivery of healthcare [1-10]. The UK Department of Health (2014) highlighted Simulation Based Education (SBE) as a means of training the workforce to deliver safer patient care [10].

The use of simulation to promote multiprofessional training is well established within paediatrics [11-19], with trainee doctors and nurses the most common professional groups participating in simulation [10]. The benefits of in-situ simulation, whereby simulation takes place in the actual clinical environment, are well documented and include promoting interprofessional learning and increasing team confidence and competence in dealing with critical events [11-12,14-16]. Simulation participants also report enjoyment and satisfaction [19,20], and because in-situ simulation occurs with teams in their own workplace, it has greater fidelity and offers the opportunity to identify and address latent errors [21].

Multidisciplinary in-situ simulation programmes have been successfully embedded within clinical practice to support interprofessional learning and enhance team working [14]. One such programme is the Simulated Interprofessional Team Training (SPRiNT) programme, implemented within a London Paediatric Intensive Care Unit (PICU), which has demonstrably improved working relationships and interprofessional learning [14].

Despite the benefits of in-situ simulation as a training tool, there are a number of constraints to its wider adoption at a national level. These include funding, the availability and expertise of faculty and educators, release of staff from the clinical environment, time to develop simulation resources and poor buy-in from key stakeholders [10].

In 2014, the Association for Simulated Practice in Healthcare (ASPiH), in conjunction with Health Education England (HEE) and the Higher Education Academy (HEA), conducted a national scoping project on the delivery of SBE in the UK [10]. Nationally, SBE practice was diverse, with a disconnect between nursing and medical teaching. Sixty-two percent of Simulation Educators (SE) reported that simulation did not support inter-professional learning, and only 26% of SE had received specific training for the delivery of SBE. Such diverse national practice highlighted the need for a more robust approach to quality assurance and for national guidance on the practice of SBE. Subsequently, ASPiH [10] produced a standards framework to guide healthcare practitioners in their delivery of SBE, inform the development of national SBE standards and drive future multiprofessional training initiatives [22].

ASPiH [10] conducted a national consultation on their proposed standards and subsequently produced a final revised standards framework, which incorporates best practice from published evidence and professional bodies including the General Medical Council (GMC), Nursing and Midwifery Council (NMC), General Pharmaceutical Council (GPhC) and Health and Care Professions Council (HCPC) [22]. In 2017, ASPiH will draft an accreditation process whereby these standards can be used to facilitate self and institutional evaluation against the best practice standards outlined in the framework [22].

In this paper we describe the first in-situ simulation programme to be developed based on the ASPiH standards framework [22]. The Multi-professional Paediatric in-situ Simulation (MPIS) programme, piloted and implemented across our regional network, demonstrates benefits to multi-professional learning and provides a standardised, structured approach that could support future ASPiH professional and institutional accreditation.

Method

Design and delivery of MPIS comprised five phases (Table 1).

| Phase | Action | Description |
|---|---|---|
| 1 | Research | Regional scoping project across the UCLPartners network |
| 2 | Design | Design and development of the MPIS programme using the ASPiH (2016) standards framework |
| 3 | Pilot | Pilot of the MPIS programme across selected UCLPartners sites |
| 4 | Analysis | Analysis and evaluation of the MPIS programme |
| 5 | Implementation | Development of strategies for the regional implementation of MPIS |

Table 1: The phases of the design and delivery for the MPIS programme.

▪ Phase 1: Research

A regional scoping project across the UCLPartners network was conducted to assess current paediatric simulation training provision.

The inclusion criteria for the regional scoping project were hospitals that were 1) members of the UCLPartners network, 2) located within the North East and North Central London area and 3) providing training and education to paediatric trainee doctors commissioned through HEE.

SE across the network were identified through a contact list provided by UCLPartners and invited by email to take part in semi-structured interviews and questionnaires, with questions based upon two main categories defined within the ASPiH standards framework: Faculty and Activity.

▪ Phase 2: Design

The results of this scoping project informed a needs analysis with driver diagrams based on ASPiH standards framework recommendations. This underpinned creation of the five MPIS programme components and outputs (Table 2).

| MPIS component | ASPiH category | ASPiH recommendation | MPIS output |
|---|---|---|---|
| Environment | Faculty; Faculty | Faculty ensures that a safe learning environment is maintained for learners and encourages reflection; Faculty highlight elements of the simulation that relate to the learning objectives | Pre-simulation checklist; Realistic representation of healthcare professionals facilitating; Realistic representation of healthcare professionals participating; Faculty pre-briefing |
| Faculty | Faculty | Faculty have undergone training in SBE | Train the Trainers course and SBE workshops offered to simulation leads |
| MPIS Activity | Activity; Activity; Activity; Activity; Activity | Learning principles should be developed in alignment with the learning needs of participants in the programme; Learning principles should be developed in alignment with formal curriculum mapping or learning/training needs analysis undertaken in clinical or educational practice; Learning incorporates a human factors approach; Latent errors should be graded using appropriate systems, such as the NPSA risk matrix, to quantify the threat to patient safety; Close collaboration should be established between the in-situ simulation training team and the parent unit | Simulation scenarios mapped to learning objectives for nurses; Simulation scenarios mapped to RCPCH curriculum for paediatric trainees; Simulation scenarios mapped to crisis resource management principles; Development of latent error identification and risk stratification form; All latent errors identified documented with an action plan; All paediatric in-situ simulation conducted within the parent unit |
| Debriefing | Faculty; Faculty; Faculty; Activity | The phases of debriefing should include reaction, analysis and summary; Debriefing should be conducted in a safe environment; Facilitators should engage with continuing professional development, with regular evaluation of performance by participants and faculty; A multidisciplinary approach to evaluating team interactions must be undertaken | Facilitators provided with a structured debriefing approach; Physical space assigned for debriefing separate from the simulation area; OSAD tool provided for peer review and quality assurance; Realistic representation of healthcare professionals both delivering and participating in the debrief |
| Evaluation | Activity; Activity | A formal evaluation by the candidates at the end of each session should be undertaken and fed back to improve the activity; Reviews of programme content are undertaken to ensure that content matches appropriate values set in GMC/NMC guidelines and those of relevant professional bodies | Standardised participant evaluation form; Participants provided with NMC revalidation form to map learning outcomes to the NMC professional body; Paediatric trainees provided with work-based assessments to map learning objectives to ARCP |

Table 2: The development of the MPIS programme components and outputs, underpinned by the relevant ASPiH (2016) standards framework category and recommendation.

To promote multiprofessional learning, integral to the design of the MPIS programme was the development of learning objectives specific to nurses and paediatric trainees. Simulation scenarios were designed with learning objectives mapped to the Royal College of Paediatrics and Child Health (RCPCH) General Paediatric and Paediatric Emergency Medicine curricula for Level 2 and 3 paediatric trainees. In the absence of an equivalent postgraduate nursing curriculum, five clinical practice nurse educators were recruited to reach consensus on defined nurse learning objectives. In addition, all scenarios were mapped to 13 crisis resource management (CRM) principles (see Appendix 1 for a sample scenario).
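As an illustration of the mapping described above, the sketch below represents one standardised scenario as a record holding trainee, nurse and CRM mappings, and flags any category that is still empty. The `Scenario` class, its field names and the example scenario content are hypothetical and not taken from the MPIS materials; the check simply encodes the design requirement that every scenario carries objectives for both professional groups plus at least one CRM principle.

```python
from dataclasses import dataclass, field


@dataclass
class Scenario:
    """Hypothetical record for one standardised MPIS scenario."""
    title: str
    # Objectives mapped to the RCPCH curricula for Level 2 and 3 trainees
    trainee_objectives: list = field(default_factory=list)
    # Objectives agreed by consensus among clinical practice nurse educators
    nurse_objectives: list = field(default_factory=list)
    # CRM principles the scenario is mapped to (MPIS uses a set of 13)
    crm_principles: list = field(default_factory=list)

    def missing_mappings(self):
        """Return the names of mapping categories that are still empty."""
        checks = {
            "trainee": self.trainee_objectives,
            "nurse": self.nurse_objectives,
            "crm": self.crm_principles,
        }
        return [name for name, items in checks.items() if not items]

    def is_fully_mapped(self):
        """True only if every mapping category has at least one entry."""
        return not self.missing_mappings()


# Illustrative content only; not drawn from the MPIS scenario bank
example = Scenario(
    title="Infant with septic shock",
    trainee_objectives=["Recognise and manage septic shock"],
    nurse_objectives=["Prepare fluid bolus and escalate observations"],
    crm_principles=["Call for help early"],
)
print(example.is_fully_mapped())
print(Scenario("Draft scenario", trainee_objectives=["x"]).missing_mappings())
```

Keeping the profession-specific objectives as separate fields, rather than one combined list, is what would allow the same scenario to be run with a uni-professional focus when needed.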

▪ Phase 3: Pilot

The inclusion criteria for the pilot phase were: hospitals recruited to the regional scoping project; multidisciplinary faculty (at least one doctor and one other healthcare professional facilitating the delivery and debrief of the simulation); multiprofessional participation (at least one paediatric trainee and one other healthcare professional participating in the simulation and debrief); and delivery of the simulation using standardised scenarios.
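The four pilot inclusion criteria can be read as a single boolean rule. The sketch below is a hypothetical encoding: the `PilotSession` record and the role labels are illustrative, and it simplifies "other healthcare professional" to "any role different from the anchor role", so, for example, a consultant participant would count as "other" here.

```python
from dataclasses import dataclass


@dataclass
class PilotSession:
    """Hypothetical summary of one candidate pilot session."""
    site_in_scoping_project: bool
    faculty_roles: list        # e.g. ["doctor", "nurse"]
    participant_roles: list    # e.g. ["paediatric trainee", "nurse"]
    standardised_scenario: bool


def includes_anchor_and_other(roles, anchor):
    """True if `anchor` is present together with at least one different role."""
    return anchor in roles and any(role != anchor for role in roles)


def meets_pilot_criteria(session):
    """Apply the four pilot inclusion criteria described in the text."""
    return (
        session.site_in_scoping_project
        and includes_anchor_and_other(session.faculty_roles, "doctor")
        and includes_anchor_and_other(session.participant_roles, "paediatric trainee")
        and session.standardised_scenario
    )


eligible = PilotSession(True, ["doctor", "nurse"], ["paediatric trainee", "nurse"], True)
doctor_led = PilotSession(True, ["doctor", "doctor"], ["paediatric trainee", "nurse"], True)
print(meets_pilot_criteria(eligible), meets_pilot_criteria(doctor_led))
```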

Multiprofessional involvement of faculty and participants was considered key to promoting buy-in from different professional groups, as current literature suggests that simulation led by doctors tends to target primarily the learning needs of doctors, to the exclusion of other professional groups [23].

Multiprofessional participants were selected from the clinical working environment on an ad hoc basis. Participants were given a simulation handbook outlining the MPIS programme and were informed that the purpose of the simulation was to promote teamwork and identify latent errors. A pre-simulation checklist was used to promote a safe environment and ensure confidentiality. The checklist was also used to ensure clear learning objectives, realistic representation of faculty and participants and familiarisation with simulation equipment prior to the simulation session (see Appendix 2).

Multiprofessional faculty delivered the simulation using the standardised simulation scenarios and debriefing was conducted using a structured Describe, Analyse and Apply approach. The standardised Objective Structured Assessment of Debriefing (OSAD) tool [24] was introduced and recommended for peer review and quality assurance of debriefing practices.

A latent error identification form with risk stratification based on the National Patient Safety Agency (NPSA) risk matrix was used to highlight latent errors in the clinical environment [21]. This engaged multiprofessional participants and faculty in developing action plans to address latent errors and improve patient safety.
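The risk stratification step can be sketched as follows, assuming the commonly published NPSA 5 × 5 matrix, in which a consequence score (1–5) is multiplied by a likelihood score (1–5) and the product is graded low (1–3), moderate (4–6), high (8–12) or extreme (15–25). The MPIS form itself may use different wording or bands; the function names and the example form entries are hypothetical.

```python
from collections import Counter


def npsa_risk_score(consequence, likelihood):
    """Multiply consequence (1-5) by likelihood (1-5), as on a 5 x 5 matrix."""
    for name, value in (("consequence", consequence), ("likelihood", likelihood)):
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {value}")
    return consequence * likelihood


def npsa_risk_grade(score):
    """Map a matrix score to a grade (assumed bands: 1-3, 4-6, 8-12, 15-25)."""
    if score <= 3:
        return "low"
    if score <= 6:
        return "moderate"
    if score <= 12:
        return "high"
    return "extreme"


# Hypothetical latent-error form entries: (category, consequence, likelihood)
entries = [
    ("Training", 4, 3),
    ("Equipment", 1, 3),
    ("Medication", 2, 2),
]
grades = Counter(npsa_risk_grade(npsa_risk_score(cons, lik)) for _, cons, lik in entries)
print(grades)
```

Because scores are products of two integers between 1 and 5, values such as 7 or 13 never occur, so simple upper-bound thresholds are enough to separate the bands.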

To promote continuing professional development, participants were given a certificate of attendance. The NMC revalidation form was provided to nurses and work based assessments offered to paediatric trainees to support professional revalidation. Learning outcomes were disseminated to participants after the simulation session.

▪ Phase 4: Analysis

The effectiveness of this programme in promoting multiprofessional learning was evaluated using a 14-item questionnaire, each item scored on a five-point Likert scale (1–5), administered before and after participation in MPIS; total scores could therefore range from 14 to 70 (see Appendix 3). Items were summed and analysed as total scores, after which individual item analysis was performed to identify which items showed the most change. Paired t-tests and mixed ANOVAs were used to analyse the change.
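A minimal sketch of the scoring and the paired comparison, assuming one list of 14 Likert responses per participant at each time point. The function names and toy data are illustrative, and the authors' statistical software is not stated. Here the differences are taken as post minus pre, so an improvement gives a positive t; Table 3 reports negative t values, consistent with pre minus post.

```python
import math
from statistics import mean, stdev

N_ITEMS = 14  # questionnaire length in MPIS


def total_score(responses):
    """Sum one participant's 14 Likert responses (each 1-5), giving 14-70."""
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("each response must be on the 1-5 Likert scale")
    return sum(responses)


def paired_t(pre_totals, post_totals):
    """Paired t statistic on post - pre differences; returns (t, df)."""
    if len(pre_totals) != len(post_totals) or len(pre_totals) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [post - pre for pre, post in zip(pre_totals, post_totals)]
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Toy totals for three participants (not study data)
pre = [40, 45, 50]
post = [48, 52, 55]
print(paired_t(pre, post))
```

If SciPy is available, `scipy.stats.ttest_rel(post, pre)` should give the same t statistic together with a two-sided p-value.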

▪ Phase 5: Implementation

Implementation of MPIS is ongoing throughout Phase 5 with key local stakeholders involved and multidisciplinary teams engaged in supporting the regular delivery of MPIS.

Results

▪ Phase 1 Result: Research

Eleven hospital sites qualified to take part in the regional scoping project. In total, 88 SE were identified across the network, of whom 51% were doctors (n=45) and 49% were from other healthcare professional backgrounds (n=43). Of these, 28 SE participated in semi-structured interviews, 17 of whom also completed the questionnaire.
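The percentages above follow directly from the reported counts. The short check below (variable names are illustrative) also shows that the 28 interviewed SE correspond to roughly one third of the 88 identified, the proportion referred to in the limitations.

```python
def percent(part, whole):
    """Percentage rounded to the nearest whole number, as reported in the text."""
    return round(100 * part / whole)


identified = 88
counts = {"doctors": 45, "other professions": 43, "interviewed": 28}
# Doctors and other professions together should account for every identified SE
assert counts["doctors"] + counts["other professions"] == identified
for label, n in counts.items():
    print(f"{label}: {n}/{identified} = {percent(n, identified)}%")
```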

Overall, our regional scoping project demonstrated findings similar to those of the ASPiH (2014) national scoping project. Across the network, nurses and trainee doctors were the largest users of in-situ simulation (Figure 1). Common challenges to the effective delivery of SBE were identified, with only four sites having a multi-professional faculty delivering regular paediatric in-situ simulation. SBE provision relied on goodwill: although 10 sites had faculty trained in the delivery of simulation, only five incorporated SBE into a formal job plan for SE.

The findings of the scoping project are presented from the combined feedback from the semistructured interviews and questionnaires. The key findings that emerged from the interviews are illustrated with verbatim quotes (the code letter suffixed to each quotation refers to the profession of the SE, i.e., doctor (D) and other healthcare professional (N)).

▪ Multi-professional delivery of in-situ simulation

Multi-professional learning through in-situ simulation was not supported, with a lack of relevant and well-defined learning objectives for professional groups other than trainee doctors. Eight sites did not have learning objectives for nurses, whereas all sites had learning objectives for doctors. Of the SE completing the questionnaire, only 29% (n=5) were involved in developing nursing learning objectives for SBE.

SE highlighted the importance of multiprofessional learning objectives to support multiprofessional engagement and learning, whilst recognising the problems associated with in-situ simulation being doctor focused and led.

“To engage paediatric nurses it is important to develop clinically relevant simulation scenarios to their specific learning needs.” (N1)

“There is a need for equal ratios of nurses to doctors in paediatric simulation and inter-professional learning is key to the delivery of multi-professional simulation.” (N2)

▪ Faculty

SE reported that their simulation faculty were trained in the delivery of simulation and debriefing through the Train the Trainers course or through membership of the London School of Paediatrics Faculty Development Programme (n=16). Despite having received such training, all identified a need for advanced debriefing courses. Moreover, faculty members from healthcare professional backgrounds other than medicine were not formally recognised or trained in the delivery of simulation.

“There are no formal multidisciplinary professionals who are a part of a faculty delivering simulation. There are no nursing leads and nurse educators are not trained in simulation.” (D1)

▪ Debriefing

Of the SE completing the questionnaire, 65% (n=11) reported using a structured approach to debriefing in their delivery of SBE. However, many trained debriefers were not engaged in peer review for quality assurance (n=14), and SE expressed a need to promote quality assurance of debriefing across the network.

“Although currently there is no evaluation of the quality of our debriefing, I would be interested in establishing peer review of debriefing using the OSAD tool.” (N3)

Furthermore, SE emphasised the importance of co-debriefing with equal representation of doctors and nurses delivering the debrief in order to promote multi-professional learning to support participant professional development and create a safe learning environment.

“Co-debriefing with nurse and doctor facilitators is required to promote inter-professional learning and team engagement.” (N2)

“It is important for the nurses to feel engaged in the debriefing, co-debriefing has been adopted” (D2)

▪ Collaborative working

Collaboration in research, education and training to support the delivery of SBE was lacking, with all SE highlighting the need to share simulation resources and good practice. All sites were involved in developing simulation resources, including simulation scenarios, but only five SE shared their resources within the UCLPartners network. Furthermore, the majority of SE (n=13) were developing simulation scenarios from critical incidents, but these resources and learning outcomes were not shared regionally.

“There is a need for equity of training for faculty and access to simulation equipment and resources.” (D1)

▪ Challenges to the delivery of multiprofessional in situ simulation

Semi-structured interviews revealed general themes on common barriers to effective delivery of multi-professional in-situ simulation. These included lack of formal recognition and remuneration for SE, lack of multi-professional focus, high clinical job demands and staffing pressures.

“There have been difficulties with multidisciplinary team involvement in simulation. The reasons are staffing pressures, time constraints and no formally identified multi-professional simulation leads. Currently nurses do not facilitate with simulation and it is primarily doctor led.” (D3)

“The perceived factors that prevent multidisciplinary engagement include the learning objectives not being met for the non-clinical team.” (N3)

“There is poor buy in from other consultants, who do not have this as part of their job plan. Those who do deliver simulation training are doing so based on good will, so the frequency and quality of the delivery of simulation is variable.” (D2)

▪ Results of phase 3: Pilot

During the pilot of MPIS, 15 simulation sessions were delivered to 75 participants, 74 of whom (doctors n=35, nurses n=39) completed pre- and post-questionnaires. Responses were analysed using a paired t-test to assess whether participant perception of multiprofessional simulation improved significantly after MPIS participation. Internal consistency was evaluated at both time points to determine whether it was statistically valid to combine items into a total score. Cronbach's alpha was 0.99 at time 1 and 0.89 at time 2, indicating high internal consistency within the tool.
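For reference, Cronbach's alpha for k items is alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores). A minimal standard-library sketch, assuming one row of item scores per participant and sample variances throughout (the software actually used by the authors is not stated; the toy data are not study data):

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha from rows of item scores (one row per participant)."""
    if len(rows) < 2:
        raise ValueError("need at least two participants")
    k = len(rows[0])
    if k < 2 or any(len(row) != k for row in rows):
        raise ValueError("need at least two items and equal-length rows")
    # Variance of each item across participants
    item_variances = [variance(column) for column in zip(*rows)]
    # Variance of participants' summed scores
    total_variance = variance([sum(row) for row in rows])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Toy data (not study data): three participants answering two items
print(cronbach_alpha([[1, 2], [2, 1], [3, 3]]))
```

Perfectly parallel items (every participant gives the same answer to each item) yield an alpha of 1, which is a quick sanity check on the formula.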

Overall, perception of simulation improved significantly following participation in MPIS (t(73)=8.51, p<0.001), from a mean total score of 46.8 (SD=7.6) to 54.7 (SD=5.3). When the analysis was broken down into individual questionnaire items, participant perception of simulation supporting multi-professional learning and development improved significantly. Using a Bonferroni correction for 14 comparisons, the significance threshold was set at p<0.0036 (0.05/14). Perception improved significantly after participation in MPIS on all items except those relating to team working skills, clinical knowledge and technical skills (items 10–12, Table 3).
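The item-level decision rule can be reproduced from the p-values reported in Table 3. In this sketch, values reported as "p<0.001" are entered as their upper bound of 0.001, which does not change any decision because 0.001 is already below the adjusted threshold of 0.05/14.

```python
ALPHA = 0.05
N_ITEMS = 14
threshold = ALPHA / N_ITEMS  # Bonferroni-adjusted threshold, about 0.0036

# Two-sided p-values for items 1-14 as reported in Table 3;
# "p<0.001" is entered as its upper bound, 0.001
reported_p = {
    1: 0.001, 2: 0.001, 3: 0.001, 4: 0.001, 5: 0.001, 6: 0.001, 7: 0.001,
    8: 0.001, 9: 0.001, 10: 0.03, 11: 0.15, 12: 0.25, 13: 0.001, 14: 0.001,
}

not_significant = sorted(item for item, p in reported_p.items() if p >= threshold)
print(f"threshold = {threshold:.4f}; non-significant items: {not_significant}")
```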

| Item | Question | Pre-MPIS mean (SD) | Post-MPIS mean (SD) | Paired t-test |
|---|---|---|---|---|
| 1 | Simulation provides specific learning objectives relevant to my profession | 4.4 (0.85) | 4.9 (0.34) | t(73)=-4.7, p<0.001 |
| 2 | There is equal representation of doctors and other healthcare professionals | 3.05 (1.17) | 4.32 (1.00) | t(73)=-7.3, p<0.001 |
| 3 | There are equal learning opportunities for doctors and other healthcare professionals during debriefing | 3.55 (1.10) | 4.57 (0.62) | t(73)=-7.7, p<0.001 |
| 4 | Debriefing is facilitated jointly by doctors and other healthcare professionals | 3.31 (1.20) | 4.68 (0.58) | t(73)=-9.8, p<0.001 |
| 5 | It is easy to contribute to debriefing | 4.07 (0.73) | 4.65 (0.51) | t(73)=-5.3, p<0.001 |
| 6 | Debriefing offers a safe and non-judgmental learning environment | 4.03 (0.81) | 4.70 (0.49) | t(73)=-6.4, p<0.001 |
| 7 | Simulation offers a safe learning environment | 4.43 (0.76) | 4.80 (0.47) | t(73)=-3.9, p<0.001 |
| 8 | There are feedback tools available for my professional revalidation | 3.47 (1.09) | 4.41 (0.92) | t(73)=-6.8, p<0.001 |
| 9 | There are opportunities available to be involved in developing learning outcome for my profession | 3.59 (1.03) | 4.34 (0.78) | t(73)=-5.5, p<0.001 |
| 10 | Simulation develops my team working skills | 4.35 (0.81) | 4.58 (0.60) | t(73)=-2.2, p=0.03 |
| 11 | Simulation develops my clinical knowledge | 4.39 (0.76) | 4.64 (0.56) | t(73)=-2.5, p=0.15 |
| 12 | Simulation develops my technical skills | 4.09 (0.91) | 4.26 (0.89) | t(73)=-1.2, p=0.25 |
| 13 | Simulation has the potential to identify errors within the clinical environment | 4.41 (0.70) | 4.72 (0.48) | t(73)=-3.5, p=0.001 |
| 14 | Simulation benefits patient care and safety | 4.53 (7.60) | 4.82 (0.38) | t(73)=-3.7, p<0.001 |

Table 3: Paired t-test analysis of pre- and post-MPIS questionnaire responses for each of the 14 items.

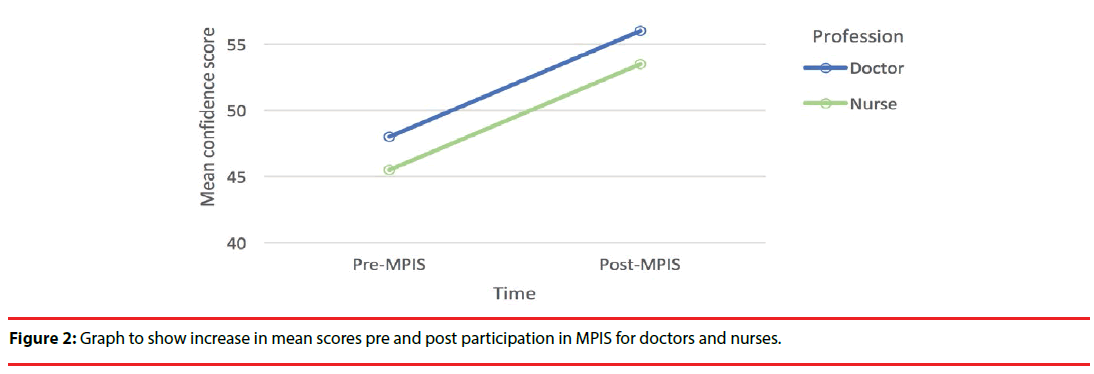

A mixed ANOVA subset analysis was performed to assess whether the change in perception following participation in MPIS differed between professional groups (doctors and nurses). Perceptions improved significantly for both groups (main effect of time: F(1,72)=71.0, p<0.001), with no significant time × profession interaction (F(1,72)=0.11, p=0.74), suggesting that both professional groups' perceptions improved to the same degree (Figure 2).
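With only two time points, a 2 (time) × 2 (profession) mixed ANOVA reduces to an analysis of each participant's change score (post minus pre): the interaction compares the mean change between the two groups, and the main effect of time tests whether the average change differs from zero. The sketch below assumes Type III sums of squares, so the main effect uses the unweighted mean of the two group means, and returns F statistics with df (1, N - 2), which matches F(1,72) for N = 74. P-values would come from the F distribution, for example via `scipy.stats.f.sf(F, 1, df)`. Function names and the toy data are illustrative.

```python
from statistics import mean


def change_scores(pre, post):
    """Post minus pre for each participant."""
    if len(pre) != len(post):
        raise ValueError("pre and post must be paired")
    return [b - a for a, b in zip(pre, post)]


def mixed_anova_2x2(pre_a, post_a, pre_b, post_b):
    """2 (time, within) x 2 (group, between) mixed ANOVA via change scores.

    Returns (F_time, F_interaction, df_error); both tests have 1 numerator df.
    """
    d_a, d_b = change_scores(pre_a, post_a), change_scores(pre_b, post_b)
    n_a, n_b = len(d_a), len(d_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two participants")
    df_error = n_a + n_b - 2
    m_a, m_b = mean(d_a), mean(d_b)
    # Pooled within-group variance of the change scores
    ss_within = sum((d - m_a) ** 2 for d in d_a) + sum((d - m_b) ** 2 for d in d_b)
    mse = ss_within / df_error
    if mse == 0:
        raise ValueError("change scores have zero within-group variance")
    # Interaction: do the two groups change by different amounts?
    f_interaction = (m_a - m_b) ** 2 / (mse * (1 / n_a + 1 / n_b))
    # Main effect of time: is the unweighted mean change different from zero?
    grand = (m_a + m_b) / 2
    f_time = grand ** 2 / (mse * 0.25 * (1 / n_a + 1 / n_b))
    return f_time, f_interaction, df_error


# Toy data (not study data): group A = doctors, group B = nurses
print(mixed_anova_2x2([1, 2, 3], [3, 4, 6], [2, 2, 2], [4, 5, 4]))
```

Working on change scores makes the logic of the reported result explicit: a large F for time with a near-zero interaction F means both groups moved upwards by a similar amount.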

▪ Latent error identification and risk stratification

Participants in the MPIS programme, together with faculty, categorised latent errors into four categories (Training, Environment, Medication and Equipment) and made a subjective risk assessment based on the NPSA risk matrix. Of the 15 in-situ simulations delivered, 10 revealed a total of 18 latent errors, the majority categorised as low (n=7) or high risk (n=8). The most common type of error was within the Training category (n=8) (Table 4).

| Type of error | Frequency (total n=18) |
|---|---|
| Training | 8 |
| Environment | 5 |
| Medication | 2 |
| Equipment | 3 |

| Level of risk | Frequency (total n=18) |
|---|---|
| Low | 7 |
| Moderate | 3 |
| High | 8 |

Table 4: Latent Error Identification and Risk Stratification.

Discussion

The National Scoping Project conducted by ASPiH [10] confirmed national variation in the delivery of SBE in healthcare, leading to the creation of a national standards framework to guide healthcare practitioners and SE in their delivery of high-quality simulation [22]. This is the first multi-professional simulation programme to be created from the ASPiH standards framework and piloted at a regional level. It is unique in its standardised delivery of simulation scenarios that have both multi- and uni-professional learning objectives.

The regional scoping project demonstrated variation in paediatric in-situ simulation across our network that is comparable to the ASPiH national scoping project [10]. Key findings suggest that multi-professional learning was not supported, with a disconnect between collaborative SBE initiatives for doctors and nurses evidenced by a paucity of learning objectives available for nurses compared to doctors.

SE highlighted that although faculty are trained in the delivery of simulation and debriefing, few participate in peer review for quality assurance of their debriefing and simulation practices. Moreover, there is a need for collaboration between SE to share resources, engage in research and education initiatives and disseminate learning across our network. These findings reinforced the need to develop a standardised MPIS programme based on the ASPiH standards framework [22], with the aim of delivering universal regional training opportunities that promote multiprofessional learning for healthcare professionals who encounter acutely unwell children.

Overall, MPIS demonstrated a positive impact on participants’ perception of enhanced multiprofessional learning through simulation. MPIS provides a structure to deliver multi-professional learning through simulation that is well received and valued by both doctors and nurses.

Standardisation of simulation scenarios with learning objectives mapped to paediatric trainee, nurse and human factors learning is unique and promotes not only the delivery of multiprofessional learning but could also be used to deliver simulation training for uni-professional learning, when focusing on profession specific learning outcomes.

MPIS not only promoted shared learning but also actively engaged multiprofessional teams in highlighting latent errors within their clinical environment, encouraging professionals to make a subjective risk assessment and develop solutions to address latent errors, with the ultimate aim of improving patient safety. Latent errors were most commonly identified within the Training category and related to CRM principles. This finding supports the need for investment in expanding SBE initiatives that drive multi-professional collaboration and focus on CRM learning to reduce harm and enhance patient safety. We propose that our standardised MPIS programme offers a successful means of achieving this. A number of standardised simulation resources were developed while designing the MPIS programme, and the programme also supported continuing professional development for nurses and paediatric trainees: work-based assessments and NMC revalidation were encouraged based on learning and reflection from the simulation activity. Standardised resources could be used to support collaborative SBE initiatives and to promote quality assurance in the delivery of simulation at regional and national levels.

This study is not without its limitations. Of the 88 SE identified across the network, only about one third participated in the regional scoping project. The SE sample was therefore self-selected, and selection bias could plausibly have affected the accuracy of the results. However, despite possible selection bias, SE feedback was consistent, showing variation in the delivery of paediatric in-situ simulation across our regional network that was comparable to national findings [10]. This informed the need to develop a standardised MPIS programme.

The development of MPIS aimed to promote multi-professional learning through engaging healthcare professionals from different backgrounds in paediatric in-situ simulation. However, the participants involved in piloting the MPIS programme comprised only doctors and nurses. The participant requirements were purposely not pre-specified in order to maintain realism and accurately capture in-situ simulation practices. This finding is interesting in itself because the delivery of MPIS does not appear to engage healthcare professionals from wider healthcare professional backgrounds. It is possible that by designing simulation scenarios that preferentially support the learning of nurses and doctors, other professional groups were unintentionally excluded from participation in the MPIS programme. However, it could be argued that multi-professional learning was supported through the generic application of CRM learning objectives with trainee doctors and nurses the largest users of SBE.

Our pilot study focused on participant perception of the benefits of learning through in-situ simulation based on previous experience of simulation and subsequent experience of the MPIS programme. This study did not establish whether previous simulation experience of the participants had a multi or uni-professional focus and therefore caution should be taken in drawing comparative conclusions.

Overall, participant perception of learning improved after participation in the MPIS programme, although there was no statistically significant perceived improvement in team working, technical skills or clinical knowledge. One explanation is that participants' change in perception related to their experience of MPIS as a learning initiative, whereas the benefits of simulation for teamwork, clinical knowledge and technical skills were already well established; pre-MPIS ratings for these items were already high (means 4.09–4.39, Table 3). The scenarios were designed to focus on learning objectives relevant to nurses and paediatric trainees and were not designed to develop technical skills per se. Through the development of CRM learning objectives, the aim was to enhance multi-professional learning and ultimately improve team working and patient care. It is possible that learning objectives designed for each professional group promote individual professional learning without a corresponding increase in perceived team working as a whole. Inter-professional learning, whereby healthcare professionals learn from, with and about each other, is beneficial to improving team working [5-9]. The results of this project cannot establish whether inter-professional learning occurred; it is therefore possible that multi-professional learning was supported in parallel between doctors and nurses without the depth that inter-professional learning offers. Further research is needed to develop strategies for inter-professional learning through SBE and to assess their impact on team working within the clinical setting.

Despite these limitations, this study is unique in establishing a regional standardised MPIS programme underpinned by the ASPiH standards framework. The programme has been shown to promote multi-professional learning, enhancing the perceived value of learning through simulation equally for doctors and nurses. If endorsed nationally, delivery of simulation using the MPIS framework could lay the foundations for ASPiH's professional and institutional accreditation and help ensure high-quality delivery of SBE in healthcare. A standardised approach to simulation also offers benefits for national collaborative work in simulation research, quality assurance, training and education. It is recommended that future work pilot the MPIS programme and simulation resources nationally alongside the ASPiH standards and future accreditation frameworks [22], to continue to inform and improve the delivery of SBE in healthcare. In the meantime, the anticipated challenge within our regional network is how to sustain and support clinical teams in delivering the MPIS programme regularly. Further work is needed to sustain these high standards in our delivery of MPIS through the standardisation of debriefing practices, use of OSAD for peer-reviewed quality assurance, more collaborative initiatives and further development of the MPIS programme to support future ASPiH professional accreditation.

Contributorship Statement

Dr Kate O’Loughlin: Responsible for the research concept, study design and delivery of the MPIS programme and piloting. Involved in data collection and interpretation of data. Prepared and wrote the manuscript for publication and gave final approval of the version to be published. Accountable for the entirety of the work.

Dr Jane Runnacles: Contributed to the research design and delivery of the study. Acted as a supervisor throughout the research. Involved in interpretation of the data and contributed to preparing and approving the manuscript for publication. Accountable for all aspects of the work.

Ms Valerie Dimmock: Contributed to the research design and delivery of the study. Acted as a supervisor throughout the research. Involved in interpretation of the data and contributed to preparing and approving the manuscript for publication. Accountable for all aspects of the work.

Professor Nicola Botting: Contributed to the research design and delivery of the study. Acted as the lead for data analysis and interpretation, in addition to preparing and approving the manuscript for publication. Accountable for all aspects of the work.

Ms Rosemary Lanlehin: Contributed to the design of the research; involved in literature searches and data preparation, analysis and interpretation. Contributed to preparing and approving the manuscript for publication. Accountable for all aspects of the work.

Dr Shye Wong: Contributed to the research design and delivery of the study. Acted as a supervisor throughout the research. Involved in interpretation of the data and contributed to preparing and approving the manuscript for publication. Accountable for all aspects of the work.

Ms Lydia Lofton: Contributed to the research design and delivery of the study. Acted as a supervisor throughout the research. Involved in interpretation of the data and contributed to preparing and approving the manuscript for publication. Accountable for all aspects of the work.

Funding Statement

This research received no specific grant from any funding agency in the public, commercial or not-for-profit sectors.

The clinical fellowship post was funded by Health Education England North Central and East London; the service evaluation work undertaken as part of this project was indirectly funded through this post.

Competing Interests Statement

The authors declare no competing interests.

References

- Frenk J, Chen L, Bhutta Z, et al. Health professionals for a new century: transforming education to strengthen health systems in an interdependent world. Lancet 376(9756), 1923-1958 (2010).

- The Scottish Government. Better health, better care: planning tomorrow’s workforce today. 2007. Accessed at www.gov.scot on Monday 13th February 2017.

- Gaba D. The future vision of simulation in healthcare. Qual. Saf. Health Care 2(2), 126-135 (2007).

- World Health Organisation. Framework for action on inter-professional education and collaborative practice. 2010. Accessed on Monday 13th February 2017.

- Reeves S, Zwarenstein M, Goldman J, et al. The effectiveness of inter-professional education: key findings from a new systematic review. J. Interprof. Care 24(3), 230-241 (2010).

- Barr H. Competent to collaborate: towards a competency-based model for inter-professional education. J. Interprof. Care 12(2), 181-188 (1998).

- Barr H. An anatomy of continuing interprofessional education. J. Contin. Educ. Health Prof. 29(3), 147-150 (2009).

- Hammick M, Freeth D, Koppel I, et al. A best evidence systematic review of interprofessional education. Med. Teach. 29(8), 735-751 (2007).

- Thistlethwaite J. Interprofessional education: a review of context, learning and the research agenda. Med. Educ. 46(1), 58-70 (2012).

- ASPiH. The national simulation development project: summary report. Accessed on Monday 13th February (2014).

- Allan CK, Thiagarajan RR, Beke D, et al. Simulation-based training delivered directly to the pediatric cardiac intensive care unit engenders preparedness, comfort, and decreased anxiety among multidisciplinary resuscitation teams. J. Thorac. Cardiovasc. Surg. 140, 646-652 (2010).

- Grant DJ, Marriage SC. Training using medical simulation. Arch. Dis. Child. 97(3), 255-259 (2012).

- Shaw NJ, Gottstein R. Trainee outcomes after the Mersey and north-west ‘pre-ST4’ neonatal simulation course. Arch. Dis. Child. 98(11), 921-922 (2013).

- Stocker M, Allen M, Pool N, et al. Impact of an embedded simulation team training programme in a paediatric intensive care unit: a prospective, single-centre longitudinal study. Intensive Care Med. 38(1), 99-104 (2012).

- Dowson A, Russ S, Sevdalis N, et al. How in situ simulation affects paediatric nurses’ clinical confidence. Br. J. Nurs. 22(11), 610-617 (2013).

- Lopreiato JO, Sawyer T. Simulation-based medical education in paediatrics. Acad. Pediatr. 15(2), 134-142 (2015).

- Mikrogianakas A, Osmond MH, Shephard A, et al. Evaluation of a multidisciplinary pediatric trauma code initiative: a pilot study. J. Trauma 64(3), 761-767 (2008).

- Ellis D, Hobbs G, Turner DA. Multidisciplinary trauma simulation for the general paediatrician. Med. Educ. 45(11), 1156-1157 (2011).

- Osman A. Undergraduate inter-professional paediatric simulation in a district general hospital. Med. Educ. 48(5), 527-528 (2014).

- Allan E, Lovell B, Murch N. Participants’ perceptions of inter-professional simulation training in the UK. BMJ Simulation & Technology Enhanced Learning 1(1), P0071 (2014).

- Lok A, Peirce E, Shore H, et al. A proactive approach to harm prevention: identifying latent risks through in situ simulation training. Patient Saf. 11(5), 160-163 (2015).

- ASPiH. Simulation-based education in healthcare. Standards framework and guidance. 2016. Accessed on Monday 13th February 2017.

- Salas E, Klein C, King H, et al. Debriefing medical teams: 12 evidence-based best practices and tips. Jt. Comm. J. Qual. Patient Saf. 34(9), 518-527 (2008).

- Runnacles J, Thomas L, Sevdalis N, et al. Development of a tool to improve performance debriefing and learning: the paediatric Objective Structured Assessment of Debriefing (OSAD) tool. Postgrad. Med. J. 90(1069), 613-621 (2014).